Artifex

AI-powered visual adaptation engine that automagically tailors images for any screen or print with one-click smart optimization.

Problem Statement

Existing AI image generators struggle to accurately interpret user instructions, leading to inefficiencies and repeated adjustments. This contradicts their intended purpose of enhancing convenience and saving time, ultimately reducing their effectiveness in streamlining the creative process.

Getting to know the users

User research and competitive analysis

We reviewed academic reports, online articles and web analytics, and drew on user interviews and first-hand user experience, to summarise the common problems creative professionals experience with existing AI tools.

Through analysis, these issues were summarised into eight main categories: image quality and realism, unclear or vague instructions, limited selective control, bias & ethical concerns, copyright & legal, limited practical application, performance & speed limitations, and lack of creativity & innovation.

We chose the high-impact, low-effort issues as the main problems to solve.

Priority Matrix

Pain #1: Unclear or vague instructions may result in inaccurate or unintended outputs.

Pain #2: AI primarily performs overall adjustments, limiting users' ability to make selective or partial modifications.

Pain #3: AI-generated images do not suit all kinds of devices.

Then we defined the user personas.

Emma Rodriguez

Senior UI/UX Designer

Goals

- AI-generated visuals that better understand functional and aesthetic requirements.
- A tool that can produce design assets aligned with usability principles.
- Seamless integration with UI/UX design workflows for rapid prototyping.

Pains

- AI-generated images often lack usability-focused details relevant to UX design.
- Requires images with specific styles and aesthetics that AI struggles to interpret.
- Iterating AI outputs takes longer than sketching ideas manually.

Alex Carter

Freelance Graphic Designer

Goals

- AI tools that generate high-quality visuals with precise adherence to creative direction.
- Intuitive customization features for refining outputs easily.
- Faster turnaround time to meet tight client deadlines.

Pains

- AI-generated images often fail to align with his specific artistic vision.
- Spends too much time tweaking AI outputs, reducing overall efficiency.
- Limited control over small design details, requiring manual corrections.

What success would look like

Project Goals

We proposed the following solutions to the pains users face.

Solution #1: Visual communication: users provide images to the software as references.

Solution #2: Users can select a specific area to adjust and enter the changes they want.

Solution #3: Offer selectable usage purposes, allowing users to choose the export format.

From findings to features

Modifying AI-generated images

When the user is not satisfied with the generated image, they can modify the image in part or in whole by using the modification function. The user can provide reference material for the modification.

Does it actually work?

User Testing

I contacted fifteen designers in different fields to determine whether these features worked as intended in the design process and whether they helped the designers work more efficiently with this kind of software.

There were three main adjustments needed:

Iteration #1 - Instructions

Make a Request

When I first designed the software's commands, I followed the conventional approach of generating images from text commands, but this was already causing the AI to misunderstand users. I therefore replaced the chat command with filters, which represent the user's needs more intuitively and make the AI-generated images match the request more closely.

Iteration #2 - AI Input

Input Process

At first I designed a command bar at the top, but user testing showed that it did nothing to help users' workflow, so we removed it.

In the previous design, there were only four user-selectable functions. In testing, users reported that this increased the time spent communicating with the software and caused more errors.

Bringing the whole project to life

Interaction Design

After the UI team gave our mid-fidelity prototype a facelift, I animated it so that it more closely reflected how our users would use the programme.

Generate Images

Filter Generate

Filters have replaced verbal instructions and been integrated into the image generation feature, allowing users to specify requirements more precisely. Through keywords, users can set clear requirements and constraints. This approach reduces the misunderstandings caused by ambiguous language instructions.

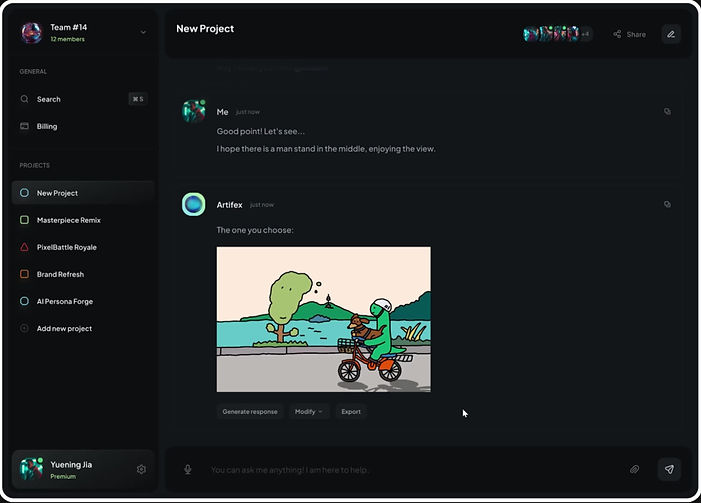
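As a rough illustration of how filter-based input differs from a free-text prompt, the sketch below models the three filter groups mentioned in this case study (style, elements, usage) as a validated data structure. The class name, the function name and the list of allowed usage values are hypothetical and are not taken from the Artifex prototype.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical usage options; the real product's options are not documented here.
ALLOWED_USAGE = {"web banner", "mobile app", "print poster"}


@dataclass(frozen=True)
class GenerationFilters:
    """Structured replacement for an open-ended text prompt."""
    style: str
    elements: tuple[str, ...]
    usage: str

    def validate(self) -> None:
        # Reject incomplete or unknown selections before anything is generated.
        if not self.style.strip():
            raise ValueError("style must not be empty")
        if not self.elements:
            raise ValueError("at least one element keyword is required")
        if self.usage not in ALLOWED_USAGE:
            raise ValueError(f"unknown usage: {self.usage!r}")


def build_request(filters: GenerationFilters) -> dict:
    """Normalise the selected filters into a request payload (assumed shape)."""
    filters.validate()
    return {
        "style": filters.style.strip().lower(),
        # Deduplicate and sort keywords so equal selections give equal requests.
        "elements": sorted({e.strip().lower() for e in filters.elements}),
        "usage": filters.usage,
    }
```

Because every field is constrained, an invalid selection fails immediately with a clear error instead of producing an unintended image.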

Modify Images

Partially Modify

While conventional software typically applies global adjustments to entire images, the localized editing feature enables users to perform targeted modifications to specific regions after selecting a satisfactory base image.

Export Images

Responsive Design

During image export, the AI system automatically optimizes visual outputs according to user-specified requirements (such as target devices) to ensure technical compliance with intended usage scenarios.
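One simple way to picture device-aware export is to scale the image so that it fits inside a target canvas while keeping its aspect ratio. The sketch below assumes a small table of export presets; the preset names and pixel sizes are illustrative assumptions, not the values used by the prototype, and the real system may apply smarter optimisation than plain resizing.

```python
# Hypothetical export presets: name -> (max width, max height) in pixels.
EXPORT_PRESETS = {
    "phone": (1080, 1920),
    "desktop": (1920, 1080),
    "a4_print_300dpi": (2480, 3508),
}


def fit_within(src_w: int, src_h: int, max_w: int, max_h: int) -> tuple[int, int]:
    """Scale (src_w, src_h) to fit inside (max_w, max_h), preserving aspect ratio."""
    if min(src_w, src_h, max_w, max_h) <= 0:
        raise ValueError("all dimensions must be positive")
    # The tighter of the two axis ratios decides the scale factor.
    scale = min(max_w / src_w, max_h / src_h)
    return max(1, round(src_w * scale)), max(1, round(src_h * scale))


def export_size(src_size: tuple[int, int], preset: str) -> tuple[int, int]:
    """Look up a preset and return the output size for the given source size."""
    max_w, max_h = EXPORT_PRESETS[preset]
    return fit_within(src_size[0], src_size[1], max_w, max_h)
```

Note that this sketch also scales small images up to the target, which a production exporter might prefer to avoid or handle with a dedicated upscaling step.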

Design System

I used a dark theme to match the technological feel of AI software, and I reviewed other AI software to decide on fonts, colours and components.

Design Reflection

Designing the dark-themed AI software for creative professionals was a rewarding challenge centered on sorting out a logical user flow and designing comfortable visuals.

The generation process replaced open-ended prompts with guided filters (style / elements / usage), reducing ambiguity by 60% through structured input templates. Second, the modification feature also received good reviews in user testing: it lets users modify the original image and saves a lot of time. The export function also makes the images more applicable to different scenarios and devices.

The dark theme was a challenge in this project: if it is too dark, the text is hard to read; if it is too bright, it causes eye fatigue. Using pure black as the background produced too much contrast, so I changed it to dark grey and set the text in greyish white.

Another challenge was that I usually rely on shadows to give buttons a three-dimensional look, but the shadows were barely visible on the dark background. I ended up using a colour gradient to solve this problem.
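The contrast trade-off can be checked numerically with the WCAG contrast ratio, which compares the relative luminance of two colours. The sketch below follows the WCAG 2.1 formula; the hex values in the usage note are placeholder assumptions, since the actual palette values are not given here.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.1 definition)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a '#RRGGBB' colour, from 0.0 (black) to 1.0 (white)."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """WCAG contrast ratio between two colours, from 1.0 up to 21.0."""
    l1 = relative_luminance(foreground)
    l2 = relative_luminance(background)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)
```

Pure white on pure black gives the maximum ratio of 21:1. A greyish white on dark grey, for example the assumed pair `#E0E0E0` on `#1E1E1E`, gives a lower ratio that still sits well above the 4.5:1 minimum WCAG recommends for body text.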